Department of Psychology, Institute for Intelligent Systems, University of Memphis, Memphis, TN, USA

There is increasing evidence from response time experiments that language statistics and perceptual simulations both play a role in conceptual processing.

In an EEG experiment we compared neural activity in cortical regions commonly associated with linguistic processing and visual perceptual processing to determine to what extent symbolic and embodied accounts of cognition applied. Participants were asked to determine the semantic relationship of word pairs (e.g., sky – ground) or to determine their iconic relationship (i.e., if the presentation of the pair matched their expected physical relationship). A linguistic bias was found toward the semantic judgment task and a perceptual bias was found toward the iconicity judgment task.

More importantly, conceptual processing involved activation in brain regions associated with both linguistic and perceptual processes. When comparing the relative activation of linguistic cortical regions with perceptual cortical regions, the effect sizes for linguistic cortical regions were larger than those for the perceptual cortical regions early in a trial, with the reverse being true later in a trial. These results map onto findings from other experimental literature and provide further evidence that processing of concept words relies both on language statistics and on perceptual simulations, whereby linguistic processes precede perceptual simulation processes.

Introduction

Conceptual processing elicits perceptual simulations. For instance, when people read the word pair sky – ground, one word presented above the other, processing is faster when sky appears above ground than when the words are presented in the reversed order.

Embodiment theorists have interpreted this finding as evidence that perceptual and biomechanical processes underlie cognition. Indeed, numerous studies show that processing is affected by tasks that invoke the consideration of perceptual features. Much of this evidence comes from behavioral response time (RT) experiments, but there is also evidence stemming from neuropsychological studies. This embodied cognition account is oftentimes presented in contrast to a symbolic cognition account that suggests conceptual representations are formed from statistical linguistic frequencies. Such a symbolic cognition account, which uses the mind-as-a-computer metaphor, has occasionally been dismissed by embodiment theorists.

Recently, researchers have cautioned against pitting one account against another, demonstrating that symbolic and embodied cognition accounts can be integrated. For instance, the Symbol Interdependency Hypothesis argues that language encodes embodied relations which language users can use as a shortcut during conceptual processing.

The relative importance of language statistics and perceptual simulation in conceptual processing depends on several variables, including the type of stimulus presented to a participant and the cognitive task the participant is asked to perform. Further, prior work found that the effects of language statistics on processing times temporally preceded the effects of perceptual simulations on processing times, with fuzzy regularities in linguistic context being used for quick decisions and precise perceptual simulations being used for slower decisions. Importantly, these studies do not deny the importance of perceptual processes. In fact, individual effects for perceptual simulations were also seen early on in a trial; however, when the effect sizes of language statistics and perceptual simulations were compared, there was evidence for early linguistic and late perceptual simulation processes.

The results from these RT studies, however, only indirectly demonstrate that language statistics and perceptual simulation are active during cognition, because the effects are modulated by hand movements and RTs. Although such methods are methodologically valid, we sought to establish whether such conclusions were also supported by neurological evidence.

In the current paper our objective was to determine when conceptual processing uses neurological processes best explained by language statistics relative to neurological processes best explained by perceptual simulations. Given the evidence that both statistical linguistic frequencies and perceptual simulation are involved in conceptual processing, and that the effect for language statistics outperforms the effect for perceptual simulations for fast RTs, with the opposite being true for slower RTs, we predicted that cortical regions commonly associated with linguistic processing, when compared with activation in cortical regions commonly associated with perceptual simulation, would be activated relatively early in an RT trial. Conversely, when compared with activation in cortical regions commonly associated with linguistic processing, cortical regions associated with perceptual simulation were predicted to show greater activity relatively later in an RT trial.

Further, we predicted activation would be modified by the cognitive task, such that perceptual cortical regions would be more active in a perceptual simulation task, whereas linguistic cortical regions would be more active in a semantic judgment task.

Traditional EEG methodologies are not quite sufficient to answer this research question. For instance, event-related potential (ERP) methods only allow for analyses of time-locked components that activate in response to specific events over numerous trials. EEG recordings combined with magnetoencephalography (MEG) recordings can provide high-resolution temporal information and spatial estimates of neural activity, provided that appropriate source reconstruction techniques are used.

However, this technique establishes whether and when cortical regions are activated, but does not answer the question of what cortical regions are activated in relation to each other. Such a comparative analysis seems to call for a different and novel method.

We utilized source localization techniques in conjunction with statistical analyses to determine when and where relative effects of linguistic and perceptual processes occurred. We did this by investigating which regions of the cortex are responsible for activity throughout the time course of each trial.

However, source localization determines only where differences emerge between conditions at specific points in time; our goal was to determine whether relatively stronger early effects of linguistic processes preceded a relatively stronger later simulation process. Consequently, we used established source localization techniques to determine where differences in activation were present during an early versus a late time period. With that information we then ran a mixed effects model on electrode activation throughout the duration of a trial to identify the effect size for activation of linguistic versus perceptual cortical regions over time. This type of analysis is progressive in that it allowed us not only to determine that activation differed between linguistic and perceptual cortical regions but also to gain insight into the relative effect size of language statistics and perceptual simulation as they contribute to conceptual processing throughout the time course of a trial.

Materials and Methods

Participants

Thirty-three University of Memphis undergraduate students participated for extra credit in a psychology course.

All participants had normal or corrected vision and were native English speakers. Fifteen participants were randomly assigned to the semantic judgment condition, and 18 participants were randomly assigned to the iconicity judgment condition.

Materials

Each condition consisted of 64 iconic/reverse-iconic word pairs extracted from previous research (see Appendix). Thirty-two pairs with an iconic relationship were presented vertically on the screen in the same order they would appear in the world (e.g., sky appears above ground).

Likewise, 32 pairs with a reverse-iconic relationship appeared in an order opposite of that which would be expected in the world (e.g., ground appears above sky). The remaining 128 trials contained filler word pairs that had no iconic relationship. Half of the fillers had a high semantic relation (cos = 0.55) and half had a low semantic relation (cos = 0.21), as determined by latent semantic analysis (LSA), a statistical, corpus-based technique for estimating semantic similarities on a scale of −1 to 1. All items were counterbalanced such that all participants saw all word pairs, but no participant saw the same word pair in both orders (i.e., both the iconic and the reverse-iconic order for the experimental items).

Equipment

An Emotiv EPOC headset (Emotiv Systems Inc., San Francisco, CA, USA) was used to record electroencephalographic data. EEG data recorded from the Emotiv EPOC headset are comparable to data recorded by traditional EEG devices.

For instance, patterns of brain activity from a study in which participants imagined pictures were comparable between the 16-channel Emotiv EPOC system and the 32-channel ActiCap system (Brain Products, Munich, Germany). The Emotiv EPOC is also able to reliably capture P300 signals, even though the accuracy of high-end systems is superior.

The headset was fitted with 14 Au-plated contact-grade hardened BeCu felt-tipped electrodes that were saturated in a saline solution. Although the headset used a dry electrode system, such technology has been shown to be comparable to traditional wet electrode systems. The headset used sequential sampling at 2048 Hz and was down-sampled to 128 Hz. The incoming signal was automatically notch filtered at 50 and 60 Hz using a 5th order sinc notch filter. The resolution was 1.95 μV.
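The sampling figures above imply a decimation factor of 2048 / 128 = 16 and a Nyquist limit of 64 Hz after down-sampling, so both notch frequencies (50 and 60 Hz) lie inside the usable band. The sketch below works through that arithmetic and shows one simple way to decimate, by averaging non-overlapping blocks. It is only an illustration: the headset's internal down-sampling method is not described in the text, `block_average_decimate` is a hypothetical helper, and the input signal is synthetic.

```python
RAW_RATE_HZ = 2048     # internal sampling rate reported for the headset
OUTPUT_RATE_HZ = 128   # rate after down-sampling


def decimation_factor(raw_rate, output_rate):
    """Return the integer ratio between the raw and the output sampling rate."""
    if raw_rate % output_rate != 0:
        raise ValueError("output rate must divide the raw rate evenly")
    return raw_rate // output_rate


def block_average_decimate(samples, factor):
    """Down-sample by averaging consecutive, non-overlapping blocks.

    Assumption: block averaging stands in for whatever anti-alias
    filtering the real device applies.
    """
    n_blocks = len(samples) // factor
    return [
        sum(samples[i * factor:(i + 1) * factor]) / factor
        for i in range(n_blocks)
    ]


factor = decimation_factor(RAW_RATE_HZ, OUTPUT_RATE_HZ)
nyquist_hz = OUTPUT_RATE_HZ / 2

# One second of synthetic data (not real EEG): the pattern 0, 1, 2, 3 repeated.
one_second = [float(i % 4) for i in range(RAW_RATE_HZ)]
downsampled = block_average_decimate(one_second, factor)

print(factor, nyquist_hz, len(downsampled))
```

One second of raw input yields 128 output samples, which is the rate at which the electrode activation in the analyses below would be available.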

Procedure

In both the semantic judgment and iconicity judgment conditions, word pairs were presented vertically on an 800 × 600 computer screen. In the semantic judgment condition, participants were asked to determine whether a word pair was related in meaning. In the iconicity judgment condition, participants were asked whether a word pair appeared in an iconic relationship (i.e., if a word pair appeared in the same configuration as the pair would occur in the world). Participants responded to stimuli by pressing designated yes or no keys on a number pad. Participants were instructed to move and blink as little as possible. Word pairs were randomly presented for each participant in order to negate any order effects. To ensure participants understood the task, a session of five practice trials preceded the experimental session.

Results

We followed prior research in identifying errors and outliers. As in those studies, error rates were expected to be high in both the semantic judgment task and the iconicity task.

Although some word pairs may share a low semantic relation according to LSA, a higher semantic relationship might sometimes be warranted for at least one word meaning. For example, according to LSA, rib and spinach have a low semantic relation (cos = 0.07), but in one meaning of rib (that of barbecue) such a low semantic relation is not justified. For the semantic judgment task, error rates were unsurprisingly approximately 25% (M = 26.07, SD = 7.51). Similarly, for the iconicity judgment condition, error performance can also be explained by the task.

Priest and flag are not assumed to have an iconic relation, even though such a relation could be imagined. Error rates were around 25–30% (M = 29, SD = 8.53). For both the semantic judgment condition and the iconicity judgment condition, these error rates were comparable with those reported elsewhere. Analyses of the errors revealed no evidence for a speed-accuracy trade-off. In the RT analysis, RTs that fell more than 2.5 SD from the mean per condition, per subject, were removed from the analysis, affecting less than 3% of the data in both experiments.

A mixed effects regression analysis was conducted on RTs with order (sky above ground or ground above sky) as a fixed factor and participants and items as random factors. F-test denominator degrees of freedom for RTs were estimated using the Kenward–Roger’s degrees of freedom adjustment to reduce the chances of Type I error. For the semantic judgment condition, differences were found between the iconic and the reverse-iconic word pairs, F(1, 2683.75) = 3.7, p = 0.05, with iconic word pairs being responded to faster than reverse-iconic word pairs, M = 1592.92, SE = 160.46 versus M = 1640.06, SE = 159.8. A similar result was obtained for the iconicity judgment condition, F(1, 3332.39) = 13.58, p.
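The 2.5 SD trimming rule used in the RT analysis can be sketched as follows. This is a minimal illustration rather than the authors' script: the function name `trim_outliers`, the tuple layout, and the example RTs are all hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_outliers(trials, n_sd=2.5):
    """Drop trials whose RT lies more than n_sd SDs from the mean of
    their own (subject, condition) cell.

    trials: list of (subject, condition, rt_ms) tuples.
    Assumption: the sample SD (n - 1 denominator) is used.
    """
    cells = defaultdict(list)
    for subject, condition, rt in trials:
        cells[(subject, condition)].append(rt)

    bounds = {}
    for key, rts in cells.items():
        if len(rts) < 2:
            # An SD cannot be estimated from one trial, so keep it.
            bounds[key] = (float("-inf"), float("inf"))
            continue
        centre, spread = mean(rts), stdev(rts)
        bounds[key] = (centre - n_sd * spread, centre + n_sd * spread)

    return [
        (subject, condition, rt)
        for subject, condition, rt in trials
        if bounds[(subject, condition)][0] <= rt <= bounds[(subject, condition)][1]
    ]


# Made-up RTs in milliseconds: subject "s1" has one very slow trial.
s1_rts = [1500, 1520, 1480, 1510, 1490, 1505, 1495, 1515, 1485, 1500, 1500, 6000]
s2_rts = [1600, 1650, 1700, 1620, 1680]
trials = [("s1", "iconic", rt) for rt in s1_rts]
trials += [("s2", "iconic", rt) for rt in s2_rts]

kept = trim_outliers(trials)
print(len(trials), len(kept))
```

Because the cut-offs are computed within each subject and condition, a generally slow participant is not penalized relative to a fast one; only trials that are unusual for that participant in that condition are dropped.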

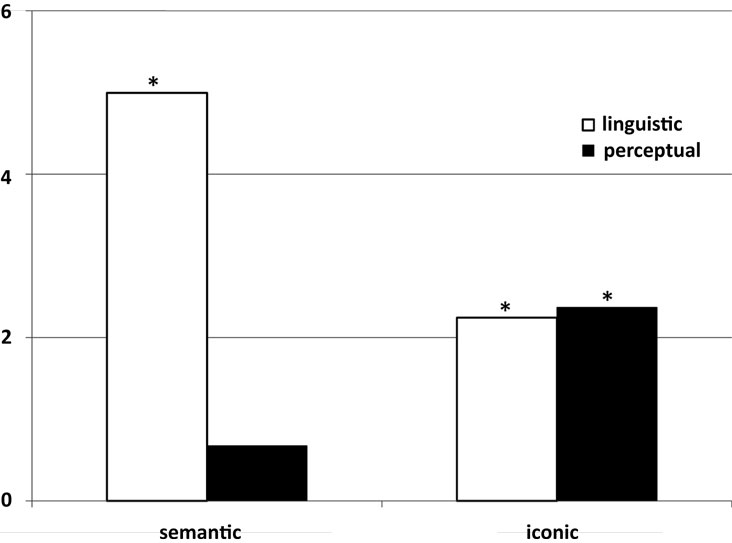

Response Times

For the iconicity judgment condition, a mixed effects regression showed statistical linguistic frequencies again significantly predicted RT, F(1, 945.78) = 5.03, p = 0.03, with higher frequencies yielding faster RTs. Iconicity ratings also yielded a significant relation with RT, F(1, 947.65) = 5.61, p = 0.02, with higher iconicity ratings yielding lower RTs (see the second two bars in the figure; see also the table).

The figure shows that statistical linguistic frequencies explained RTs in both the semantic judgment and the iconicity judgment conditions, but the effect was stronger in the semantic judgment than in the iconicity judgment condition. The figure and the table also show the opposite results for perceptual simulation, in that during the semantic judgment condition, the effect of perceptual simulation on RT was limited (and not significant).

However, in the iconicity judgment condition, perceptual simulation was significant. The interaction for linguistic frequencies and condition (semantic versus iconic) was significant, F(2, 1005.05) = 15.88, p.

To complement the pattern observed in the figure, in both our RT data and in the sLORETA results, we performed a mixed effects regression on electrode activation. We assigned the linguistic cortical regions, as determined by sLORETA localization, a dummy value of 1, and we assigned the perceptual cortical regions, as determined by sLORETA localization, a dummy value of 2.

We used electrode activation as our dependent variable, and participant, item, and receptor as random factors. The reason we used individual receptors as random factors was to rule out strong effects that could be observed for one receptor but not for others within the regions commonly associated with linguistic or perceptual processing.
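To make the coding scheme concrete, the sketch below splits a trial into 20 time bins and computes, per bin, a t statistic contrasting activation in regions coded 2 (perceptual) with regions coded 1 (linguistic). It is a deliberately simplified stand-in: a Welch t-test replaces the mixed effects regression with participant, item, and receptor as random factors, and the function names and data are synthetic assumptions. The sign convention is chosen so that negative values indicate relatively stronger linguistic activation and positive values relatively stronger perceptual activation.

```python
import math
from statistics import mean, variance

LINGUISTIC, PERCEPTUAL = 1, 2   # dummy codes used in the text


def bin_index(sample_idx, n_samples, n_bins=20):
    """Map a sample position within a trial to one of n_bins time bins."""
    return min(sample_idx * n_bins // n_samples, n_bins - 1)


def welch_t(group_a, group_b):
    """t statistic for mean(group_b) - mean(group_a), unequal variances."""
    se = math.sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_b) - mean(group_a)) / se


def t_by_time_bin(records, n_samples, n_bins=20):
    """records: iterable of (region_code, sample_idx, activation)."""
    bins = [{LINGUISTIC: [], PERCEPTUAL: []} for _ in range(n_bins)]
    for region, idx, value in records:
        bins[bin_index(idx, n_samples, n_bins)][region].append(value)
    return [welch_t(b[LINGUISTIC], b[PERCEPTUAL]) for b in bins]


# Synthetic activation: linguistic regions start high and decline,
# perceptual regions start low and rise. Not real EEG data.
n_samples = 200
jitters = [-0.1, 0.0, 0.1]
records = []
for idx in range(n_samples):
    progress = idx / (n_samples - 1)
    for jitter in jitters:
        records.append((LINGUISTIC, idx, 1.0 - progress + jitter))
        records.append((PERCEPTUAL, idx, progress + jitter))

t_values = t_by_time_bin(records, n_samples)
print(len(t_values), t_values[0] < 0, t_values[-1] > 0)
```

With data shaped like this, the early bins come out negative and the late bins positive, which is the kind of crossover the per-bin analysis is designed to detect.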

With this analysis, our objective was to determine to what extent linguistic or perceptual cortical regions overall showed increased activation throughout the trial. As in the previous analyses, F-test denominator degrees of freedom for the dependent variable were estimated using the Kenward–Roger’s degrees of freedom adjustment.

For the semantic judgment condition, a significant difference was observed between linguistic and perceptual cortical regions, F(1, 1153108.58) = 46.70, p.

Figure. (A) t-values for each of the 20 time bins for both the semantic judgment and iconicity judgment conditions. (B) t-values for each of the 20 time bins for both the semantic judgment and iconicity judgment conditions fitted using a sinusoidal curve model, together with correlation coefficients, standard errors, and parameter coefficients for the sinusoidal model, y = a + b × cos(cx + d). In both panels, negative t-values represent a relative bias toward linguistic cortical regions, and positive t-values represent a relative bias toward perceptual cortical regions.

To further demonstrate the neurological evidence for relatively earlier linguistic processes and relatively later perceptual simulation, we fitted the t-test values for the 20 time bins using exponential, power law, and growth models. The fit of the sinusoidal curve was superior to these models across the two conditions. Figure B presents the fit, the standard errors, and the values for the four parameters.
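As a rough illustration of fitting the sinusoidal model y = a + b × cos(cx + d) to 20 per-bin values, the sketch below uses a coarse grid search over c and d and solves for a and b in closed form, since the model is linear in a and b once c and d are fixed. This is a swapped-in technique, not the procedure reported here (the reported fit appears to have been iterative), and the data are generated from made-up parameters rather than taken from the study. All function names are hypothetical.

```python
import math


def sinusoid(x, a, b, c, d):
    """The four-parameter model y = a + b * cos(c * x + d)."""
    return a + b * math.cos(c * x + d)


def fit_offset_and_amplitude(xs, ys, c, d):
    """Least-squares a and b for fixed c and d (simple linear regression
    of y on u = cos(c * x + d))."""
    us = [math.cos(c * x + d) for x in xs]
    mean_u = sum(us) / len(us)
    mean_y = sum(ys) / len(ys)
    s_uu = sum((u - mean_u) ** 2 for u in us)
    if s_uu < 1e-12:
        return mean_y, 0.0  # cosine term is effectively constant
    s_uy = sum((u - mean_u) * (y - mean_y) for u, y in zip(us, ys))
    b = s_uy / s_uu
    return mean_y - b * mean_u, b


def sum_squared_error(xs, ys, params):
    return sum((y - sinusoid(x, *params)) ** 2 for x, y in zip(xs, ys))


def fit_sinusoid(xs, ys, c_grid, d_grid):
    """Grid search over c and d; returns (best_params, best_sse)."""
    best_params, best_sse = None, float("inf")
    for c in c_grid:
        for d in d_grid:
            a, b = fit_offset_and_amplitude(xs, ys, c, d)
            err = sum_squared_error(xs, ys, (a, b, c, d))
            if err < best_sse:
                best_params, best_sse = (a, b, c, d), err
    return best_params, best_sse


# Synthetic "t-values" for 20 time bins, generated from made-up parameters.
xs = list(range(1, 21))
ys = [sinusoid(x, 0.5, 3.0, 0.25, 2.0) for x in xs]

c_grid = [0.05 * k for k in range(1, 21)]              # 0.05 to 1.0
d_grid = [2 * math.pi * k / 72 for k in range(72)]     # 5-degree steps

params, sse = fit_sinusoid(xs, ys, c_grid, d_grid)
mean_y = sum(ys) / len(ys)
total_ss = sum((y - mean_y) ** 2 for y in ys)
r_squared = 1 - sse / total_ss
print(round(r_squared, 4))
```

The recovered parameters are not unique (for example, flipping the sign of b and shifting d by π gives the same curve), so goodness of fit is a safer thing to compare across models than the individual parameter values.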

The sinusoidal fit converged in four iterations (iconicity task) and five iterations (semantic task) to a tolerance of 0.00001.

Using the sinusoidal model and the parameters derived from the data, the following pattern emerged (Figure B). For both the semantic judgment and the iconicity judgment conditions, linguistic cortical regions dominated initially, followed later by perceptual cortical regions. As Figure B shows, activation in linguistic cortical regions dominated in the semantic judgment task, whereas activation in perceptual cortical regions was prominent in the iconicity judgment task. Moreover, linguistic cortical regions showed greater activation relatively early in the trial, whereas perceptual cortical regions showed greater activation relatively late in processing. The results from these analyses are in line with results we obtained through both more commonly used source localization techniques and RT analyses, but they give a more detailed view of relative cortical activation for linguistic and perceptual processes throughout each trial.

Discussion

The purpose of this experiment was to neurologically determine to what extent both linguistic and embodied explanations can be used in conceptual processing. The results of a semantic judgment and an iconicity judgment task demonstrated that both language statistics and perceptual simulation explain conceptual processing.

Specifically, statistical linguistic frequencies best explain semantic judgment tasks, whereas iconicity ratings better explain iconicity judgment tasks. Our results also showed that linguistic cortical regions tended to be relatively more active overall during the semantic task, and perceptual cortical regions tended to be relatively more active during the iconicity task. Moreover, on any given trial, neural activation progressed from language processing cortical regions toward perceptual processing cortical regions. These findings support the conclusion that conceptual processing is both linguistic and embodied, both in early and late processing; however, when comparing the relative effect of linguistic processes versus perceptual simulation processes, the former precedes the latter.

Standard EEG methods, such as ERP, are extremely valuable when identifying whether a difference in cortical activation can be obtained for different stimuli.

The drawback of these traditional methods is that excessive stimulus repetition is required. Moreover, ERP is useful in identifying whether an anomaly is detected or whether a shift in perceptual simulation has taken place, but does not sufficiently answer the question of to what extent different cortical regions are relatively more or less active than others. The technique shown here used source localization techniques to determine where differences in activation were present during early and late processing. We then used that information to compare the relative effect sizes of two clusters of cortical regions over the duration of the trial. This method is novel, yet its findings match those obtained from more traditional methods. This method obviously does not render fMRI unnecessary for localization. In our analyses we compared the relative dominance of different clusters of cortical regions (filtering out their individual effects).

Such a comparative technique does not allow for localization of specific regions of the brain; it only allows for a comparison of (predetermined) regions.

How can the findings reported in this paper be explained in terms of the cognitive mechanisms involved in language processing? We have argued elsewhere that language encodes perceptual relations.

Speakers translate prelinguistic conceptual knowledge into linguistic conceptualizations, so that perceptual relations become encoded in language, with distributional language statistics building up as a function of language use. The Symbol Interdependency Hypothesis states that comprehension relies on both statistical linguistic processes and perceptual processes. Language users can ground linguistic units in perceptual experiences (embodied cognition), but through language statistics they can bootstrap meaning from linguistic units (symbolic cognition). Iconicity relations between words, the modality of a word, the valence of a word, the social relations between individuals, the relative location of body parts, and even the relative geographical location of city words can be determined using language statistics. The meaning extracted through language statistics is, however, shallow, but provides good-enough representations. For a more precise understanding of a linguistic unit, perceptual simulation is needed. Depending on the stimulus (words or pictures), the cognitive task (current study), and the time of processing (current study), the relative effect of language statistics or perceptual simulations dominates. The findings reported in this paper support the Symbol Interdependency Hypothesis, with the relative effect of the linguistic system being more dominant in the early part of the trial and the relative effect of the perceptual system dominating later in the trial.

The RT and EEG findings reported here are relevant for a better understanding of the mechanisms involved in conceptual processing.

They are also relevant for a philosophy of science. Recently, many studies have demonstrated that cognition is embodied, moving the symbolic and embodiment debate toward embodied cognition. The history of the debate is, however, reminiscent of the parable of the blind men and the elephant. In this tale, a group of blind men each touch a different part of an elephant in order to identify the animal, and when comparing their findings learn that they fundamentally disagree because they fail to see the whole picture. Evidence for embodied cognition is akin to identifying the tusk of the elephant, and evidence for symbolic cognition is similar to identifying its trunk. Dismissing or ignoring either explanation is reminiscent of the last lines of the parable: “For, quarreling, each to his view they cling. Such folk see only one side of a thing” (Udana, 6.4). Cognition is both symbolic and embodied; the important question now is under what conditions symbolic and embodied explanations best explain experimental data. The current study has provided RT and EEG evidence that both linguistic and perceptual simulation processes play a role in conceptual cognition, to different extents, depending on the cognitive task, with linguistic processes preceding perceptual simulation.


Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Reviewed by: reviewers from the University of Pisa, Italy, and Grand Valley State University, USA.

Copyright: © 2012 Louwerse and Hutchinson. This is an open-access article distributed under terms that permit use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

Correspondence: Max Louwerse, Department of Psychology, Institute for Intelligent Systems, University of Memphis, 202 Psychology Building, Memphis, TN 38152, USA. E-mail: maxlouwerse@gmail.com.


Hello.

I hope you can help me. I hope to do some neurofeedback tomorrow. I have 4 scenarios set up:

1. Monitoring
2. Acquisition
3. Classifier-Trainer
4. Neurofeedback

But I have a problem: I cannot see the EEG signal. (I have set up the driver for Emotiv.) I can initially see the EEG signal in the monitoring scenario, but then it disappears! I am using the Emotiv headset. I can see the EEG signal using the Emotiv TestBench.

Any ideas? How can I check if the driver is working correctly?

I have attached a file showing the stages: connecting using the driver, the initial EEG signal, the CMD windows, and the scenario with module information.

Hi.

My temporal filters are as follows:

Butterworth
Band Pass
Filter order: 4
Freq: 4 Hz to 20 Hz
Band Pass Ripple: 0.5 dB

For Signal Display:

Time scale: 10
Display mode: scan
Manual Vertical Scale = true
Vertical scale = 20

Does this sound OK?

When I changed the 'sample count per sent block' from 32 to 16, I got 2 minutes of EEG signal displayed. Then it flatlined! Strange.

I have made the simplest scenario with an acquisition client and a signal display. Sometimes I get a strange EEG signal display which looks like barcodes.